A Capability Based Client: The DarpaBrowser

BAA-00-06-SNK

Focused Research Topic 5

by Marc Stiegler and Mark Miller

COMBEX INC.

Contacts

Technical: Marc D. Stiegler

- PO Box 711
- Kingman AZ 86402
- Voice: (928) 718-0758
- Cell Ph.: (928) 279-6869
- Email: marcs-at-skyhunter.com
- Fax: 413-480-0352

Administrative: Henry I. Boreen

- P.O. Box 4070
- Rydal PA 19046
- Voice: 215-886-6459
- Email: hboreen-at-comcast.net
- Fax: 215-886-2020

Date of Report: 26 June 2002

Table of Contents

- Executive Summary
- DarpaBrowser Capability Security Experiment
- Introduction
- Goals
- Principle of Least Authority (POLA)
- Failures Of Traditional Security Technologies
- Capability Security
- E Programming Platform
- Preparation for the Experiment
- Building CapDesk
- Building the E Language Machine
- Building the DarpaBrowser
- Building the Renderers
- Taming Swing/AWT
- Limitations On the Approach
- The Experiment
- Results
- Lessons Learned
- General Truths
- Specific Insights
- Post-Experiment Developments
- Closing Vulnerabilities
- Development of Granma's Rules of POLA
- Introduction of SWT
- Assessment of Capabilities for Intelligent Agents and User Interface Agents
- Conclusions
- References
- Appendices
- Appendix 1: Project Plan
- Appendix 2: DarpaBrowser Security Review
- Appendix 3: Draft Requirements For Next Generation Taming Tool (CapAnalyzer)
- Appendix 4: Installation Procedure for building an E Language Machine
- Appendix 5: Powerbox Pattern
- Appendix 6: Granma's Rules of POLA
- Appendix 7: History of Capabilities Since Levy

A Capability Based Client: The DarpaBrowser

Executive Summary

The broad goal of this research was to assess whether capability-based security [Levy84] could achieve security goals that are unachievable with current traditional security technologies such as access control lists and firewalls. The specific goal of this research was to create an HTML browser that could use capability confinement on a collection of plug-replaceable, possibly malicious, rendering engines. In confining the renderer, the browser would ensure that a malicious renderer could do no harm either to the browser or to the underlying system.

Keeping an active software component such as an HTML renderer harmless while still allowing it to do its job is beyond the scope of what can be achieved by any other commercially available technology: Unix access control lists, firewalls, certificates, and even the Java Security Manager are all helpless in the face of this attack from deep inside the coarse perimeters that they guard. And though the confinement of a web browser's renderer might seem artificial, it is in fact an outstanding representative of several large classes of crucial security problems. The most direct example is the compound document, familiar from the Microsoft Office suite: a single Microsoft Word document can embed spreadsheets, pictures, and graphics, all driven by different computer programs from different vendors. Identical situations (from a security standpoint) arise when installing a plug-in (like RealVideo) into a web browser, or an ActiveX control into a web page.

In such a compound document, none of the programs needs more than a handful of limited and specific authorities. None of them needs the authority to manipulate the window elements outside its own contained area. They absolutely do not need the authority to launch trojan horses, or to read and sell the user's most confidential data to the highest bidder on eBay. Yet today we grant such authority as a matter of course, because we have no choice. Who can be surprised, with this as the most fundamental truth of computer security today, that thirteen-year-old children break into our most secure systems for an afternoon's entertainment?

To tackle the problem, Combex used the E programming language, an open source language specifically designed to support capability security in both local and distributed computing systems. We used E to build CapDesk, a capability secure desktop, that would confine our new browser (the DarpaBrowser) which would in turn use the same techniques to even more restrictively confine the renderer. Once we had completed draft versions of the CapDesk, DarpaBrowser, and Malicious Renderer, we brought in an outside review team of high-profile/high-power security experts to review the source code and conduct live experiments and attacks on the system. The DarpaBrowser development team actively and enthusiastically assisted the review team in every way possible to maximize the number of security vulnerabilities that were identified.

The results can only be described as a significant success. We had anticipated that, during the security review, some number of vulnerabilities would be identified. We had anticipated that the bulk of these would be easy to fix. We had anticipated that a few of those vulnerabilities might be too difficult to fix in the time allotted for this single research effort, since the technology being used is still in a pre-production state. But more important than any of this, we had also predicted that no vulnerabilities would be found that could not be fixed straightforwardly inside the capability security paradigm. All these expectations, including the last one, were met. As stated by the external security review team in their concluding remarks on the DarpaBrowser:

- We wish to emphasize that the web browser exercise was a very difficult problem. It is at or beyond the state of the art in security, and solving it seems to require invention of new technology. If anything, the exercise seems to have been designed to answer the question: Where are the borders of what is achievable? The E capability architecture seems to be a promising way to stretch those borders beyond what was previously achievable, by making it easier to build security boundaries between mutually distrusting software components. In this sense, the experiment seems to be a real success. Many open questions remain, but we feel that the E capability architecture is a promising direction in computer security research and we hope it receives further attention.

One of the by-products of this research, as a consequence of building the infrastructure needed to support the experiment, was the construction of a rudimentary prototype of a capability secure desktop, CapDesk. CapDesk and the DarpaBrowser with its malign renderer provide a vivid demonstration that the desktop computer can be made invulnerable to conventional computer viruses and trojan horses without sacrificing either usability or functionality.

These results could have tremendous consequences. They give us at last a real hope that our nation--our industrial base, our military, and even our grandmothers and children--can reach a level of technology that allows them to use computers with minimal danger from either the thirteen-year-old script kiddie or the professional cracker. The Capability Secure Client points the way to a still-distant but now-possible future in which cyberterror appears only in Tom Clancy novels.

For a full decade, year after year, computer attackers have raced ever farther ahead of computer defenders. It is only human for us to conclude, if a problem has grown consistently worse for such a long period of time, that the problem is insoluble, and that it will plague our distant descendants a thousand years from now. But it is not true. We already know--and this project has begun to demonstrate--that capability security can turn this tide decisively in favor of the defender. The largest question remaining is, do we care enough to try?

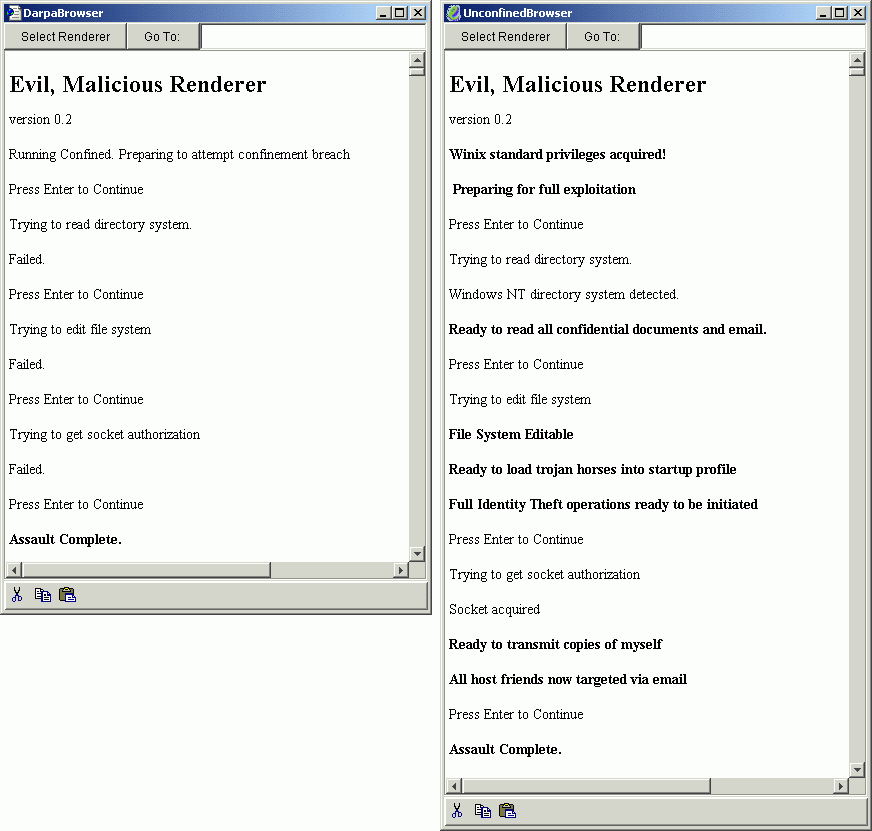

We end this executive summary with a picture we believe to be more eloquent than any words. On the right is the world of computer security as it exists today. On the left is the world of computer security as it can be. You, the reader of this document, are now on the front line in the making of the choice between these two worlds.

The Malicious Renderer running in two different environments. On the left, the renderer is running under capability confinement. It is unable to achieve any compromise of the security either of the browser that uses the renderer, or the underlying operating system. On the right, the exact same renderer is running unconfined, with all the authorities any executing module receives by default under either Unix or WinNT (referred to as "Winix" here). With Winix authorities, the renderer takes full control of the user's computing resources and data.

DarpaBrowser Capability Security Experiment

Introduction

Goals

A precise description of the goals can be found in the Project Plan (Appendix 1). The driving motivation was to show that capability based security can achieve significant security goals beyond the reach of conventional security paradigms.

What is wrong with conventional security paradigms? They all impose security at too coarse a granularity to engage the problem. This failure is acutely visible when considering the issues explored in this research, in which we must protect a web browser and its underlying operating environment from the browser's possibly-malicious renderer. Even though the browser must grant the renderer enough authority to write on a part of the browser's own window, the browser absolutely must not allow the renderer to write beyond those bounds. This is a classic situation in which POLA is required. Let us discuss the POLA concept, and then look at a number of conventional security technologies to see how they fail to implement POLA at all, much less assist in this sophisticated context.

Principle of Least Authority (POLA)

The shield at the heart of capability confinement is the Principle of Least Authority, or POLA (introduced in [Saltzer75] as "The Principle of Least Privilege"). POLA is thousands of years old, and quite intuitive: never give a person (or a computing object) more authority than it needs to do its job. When you walk into a QuickMart for a gallon of milk, do you hand the clerk your wallet and say, "take what you need, give me the rest back"? Of course not. When you hand the clerk exact change, you are following POLA. When you hand someone the valet key for your car, rather than the normal key, you are again following POLA.

Our computers are ludicrously unable to enforce POLA. When you launch any application--be it a $5000 version of AutoCAD fresh from the box or the Christmas Elf Bowling game downloaded from an unknown site on the Web--that application is immediately and automatically endowed with all the authority you yourself hold. Such applications can plant trojans as part of your startup profile, read all your email, transmit themselves to everyone in your address book using your name, and connect via TCP/IP to their remote masters for further instruction. This is, candidly, madness. It is no wonder that, with this as an operating principle so fundamental no one even dares think of alternatives, cyberattack seems intractably difficult to prevent.

Failures Of Traditional Security Technologies

Firewalls: Firewalls are inherently unable to implement POLA. They are perimeter security systems only (though the perimeter may be applied to a single computer). Once an infection has breached the perimeter, all the materials and machines within the perimeter are immediately open to convenient assault. A firewall cannot discriminate trust boundaries between separate applications; indeed, it doesn't even know there are different applications, much less different modules inside those applications. To the firewall, all the applications are one big happy family.

Access Control Lists: Access Control Lists (ACLs) as provided by Unix and WinNT are also inherently unable to implement POLA. It is possible, with sufficient system admin convolutions, to cast certain applications into their own private user spaces (web servers are frequently treated as separate users), but this is far too complicated for the user of a word processor. ACLs fundamentally cannot discriminate between applications within a user's space, much less discriminate between components inside an application (such as document-embedded viruses written with an embedded programming language like Visual Basic for Applications, or an ActiveX control or Netscape plug-in for a web browser).

Certificates: Of all the currently popular proposals for securing computers, certificates that authenticate the authors of the software are most pernicious and dangerous. Certificates do not protect you from cyberviruses embedded in the software. Rather, they lull you into a false sense of security that encourages you to go ahead and grant inappropriate authority to software that is still not trustworthy. In the year 2000, an employee at Microsoft embedded a Trojan horse into one of the DLLs in Microsoft FrontPage. Microsoft asserted that they had had nothing to do with it, and started a search for the employee who had engaged in this unauthorized attack. What difference does it make whether Microsoft had anything to do with it or not? Microsoft authenticated it. The user's computer was just as subverted, regardless of who put it there. The real problem is, once again, the absence of POLA. FrontPage didn't need the authority to rewrite the operating system and install Trojan horses and should not have had such authority. The presence of a Trojan horse in FrontPage would, in any sensible POLA-based system, be irrelevant, because the attack would be impotent. There are uses for certificates in a POLA-based world, but this is not one of them.

Java Security Manager: Of the collection of current security technologies, the Java Security Manager comes closest to being useful. The Java Security Manager wraps a single application, not a user account (like ACLs) or a system of computers (like firewalls). By using sufficiently cunning acts of software sleight-of-hand, one can even place different software modules inside different trust realms (though ubiquitously moving individual software objects into individual trust domains and handing out POLA authorities at that fine-grain level of detail would be too unwieldy, and too complex, to have understandable security properties). However, even the Java Security Manager does not begin to implement POLA. There are a handful of extremely powerful authorities that the Manager is able to selectively deny to the application. But authorities that are not self-evidently sledge-hammers, such as the authority to navigate around an entire window once you've gotten access to any individual panel, are far too subtle for the Java Security Manager to grasp. A malicious renderer could, with only a couple of simple lines of code, take control of the browser's entire user interface, and gleefully spoof the user again and again, faster than the eye can blink.

As we see, none of these technologies is able to seriously come to grips with the crucial needs of POLA either separately or in combination. This is why computer security is in such a ghastly state of disrepair today. The answer is capabilities.

Capability Security

Capabilities are a natural and intuitive technology for implementing POLA. Capabilities can be thought of as keys in the physical sense; if you hand someone a key, you are in a single act designating both the object for which authority is being conveyed (the object that has the lock), and the authority itself (the ability to open the lock). Capabilities, like idealized physical keys, can only be gotten from someone who already has the key (i.e., the shape of the key cannot be successfully guessed, and a lockpick cannot figure it out either). Authority is delegated by handing someone a copy of the key. Revocation of a capability is comparable to changing the lock (though changing software locks is much easier than changing hardware). One might think that making individual keys for every object that could possibly convey authority in a computer program would be unwieldy. But in practice, since the authority is being conveyed by the reference (designation) to the object itself, and since you have to hand out the reference anyway for someone to use the object, it turns out to be quite simple. In most circumstances the conveyance of authority with the reference makes the authority transfer invisible, and "free". Finally, because capabilities implement POLA naturally and ubiquitously, an emergent property of capability-confined software is defense-in-depth: acquisition of one capability does not in general open up a set of exploits through which additional capabilities can be acquired. The implementation of capabilities that is today closest to production deployment is the E Programming Platform.
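The key analogy can be made concrete with a small sketch. The Python below is an illustration only: Python does not enforce capability discipline the way E does, and the names `Mailbox`, `make_revocable`, and `untrusted_sender` are hypothetical, not part of E or CapDesk. It shows delegation as handing over a reference, and revocation ("changing the lock") as cutting a forwarder off from its target.

```python
class Mailbox:
    """An object that can be used only by code holding a reference to it."""

    def __init__(self):
        self._messages = []

    def deliver(self, text):
        self._messages.append(text)

    def count(self):
        return len(self._messages)


class Revoked(Exception):
    """Raised when a revoked forwarder is used."""


def make_revocable(target):
    """Return (forwarder, revoke). The forwarder relays calls until revoke() runs."""
    cell = {"target": target}

    class Forwarder:
        def __getattr__(self, name):
            # Called for every attribute the forwarder itself lacks.
            if cell["target"] is None:
                raise Revoked("capability has been revoked")
            return getattr(cell["target"], name)

    def revoke():
        cell["target"] = None

    return Forwarder(), revoke


def untrusted_sender(mailbox_cap):
    # This code was handed one reference and nothing else:
    # no file system, no network, no other mailbox.
    mailbox_cap.deliver("hello")


mailbox = Mailbox()
cap, revoke = make_revocable(mailbox)
untrusted_sender(cap)   # delegation: hand over the "key"
revoke()                # revocation: "change the lock"
try:
    untrusted_sender(cap)
    delivered_after_revoke = True
except Revoked:
    delivered_after_revoke = False
```

In a language that enforces capability discipline, the forwarder's internals would be unreachable by the holder; in Python they can still be reached by introspection, so this shows the shape of the pattern rather than a security boundary.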

E Programming Platform

The E Programming Platform, at the heart of which lies the E programming language, is specifically designed to support capability security in both local and distributed contexts. E is an open source system [Raymond99], and the draft book E in a Walnut [Stiegler00] is the principal guide for practical E programming. E is still a work in progress. Indeed, the bulk of the work done to complete the DarpaBrowser project was fleshing out parts of the E architecture needed by the browser environment. But operational software has been written and deployed with E. E is not yet feature complete, but the features it does have are robust.

Preparation for the Experiment

Building CapDesk

We started with the eDesk point-and-click, capability secure distributed file manager that was the first serious E program used for production operations. We augmented eDesk with a rudimentary capability-confining launching system adequate for launching basic document-processing applications and a basic Web Browser. Adding the launching system turned eDesk into a rudimentary capability secure desktop, i.e., CapDesk.

Key components of the launching system include:

- Point-and-click installation module that negotiates endowments on behalf of the confined application. An endowment is an authority automatically granted to the application at launch time. Basic document processors need only a user-approved name and icon for their windows, which are used to prevent window forgery. Web browsers work most simply if endowed with network protocols; in this case, specifically the HTTP protocol.

- Powerbox module that manages authority grant and revocation on behalf of a confined application. The powerbox launches the app, conveys the authorities endowed at installation, and negotiates with the user on the application's behalf for additional authorities during execution.
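The division of labor between endowments and the powerbox can be sketched roughly as follows. This is a simplified illustration in Python, not the CapDesk implementation: the class `Powerbox`, the authority names, and the `ask_user` callback (standing in for a user dialog) are all hypothetical, and revocation is omitted for brevity.

```python
class Powerbox:
    """Holds the authorities an application may receive; the app never holds the powerbox."""

    def __init__(self, endowments, grantable, ask_user):
        self._endowments = dict(endowments)  # authorities agreed at installation
        self._grantable = dict(grantable)    # authorities the user may grant later
        self._ask_user = ask_user            # stand-in for a confirmation dialog

    def launch(self, app_main):
        def request(name):
            # Negotiate with the user on the application's behalf.
            if name in self._grantable and self._ask_user(name):
                return self._grantable[name]
            return None

        # The app receives its endowments and a narrow request function only.
        return app_main(dict(self._endowments), request)


def editor_main(endowments, request):
    """A confined application: it starts with its endowments and asks for the rest."""
    title = endowments["window_title"]
    read_notes = request("read:notes.txt")
    network = request("network")
    return title, read_notes is not None, network is not None


def cautious_user(name):
    # This hypothetical user approves file reads and refuses everything else.
    return name.startswith("read:")


box = Powerbox(
    endowments={"window_title": "CapEdit"},
    grantable={
        "read:notes.txt": lambda: "contents of notes.txt",
        "network": lambda: "socket",
    },
    ask_user=cautious_user,
)
result = box.launch(editor_main)
```

Here the editor ends up with its window title and the one file the user approved, and nothing else; the network authority was requested but never granted.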

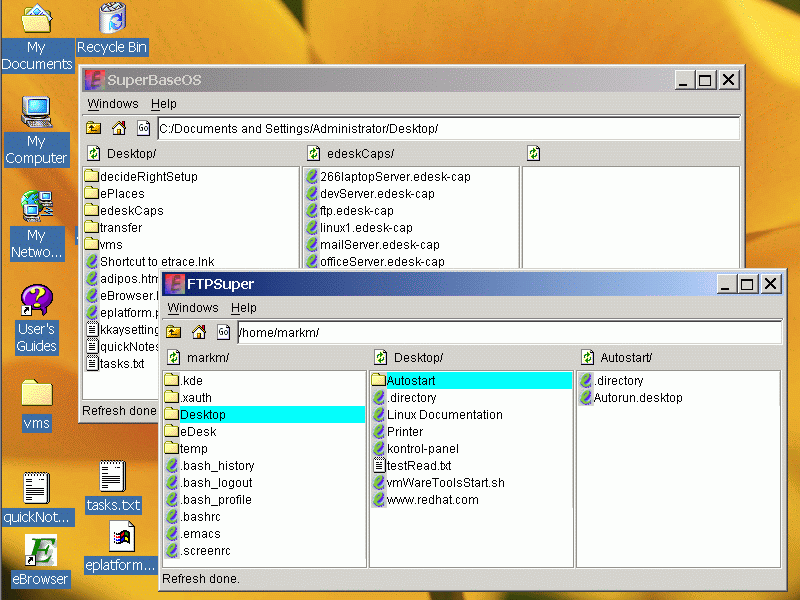

CapDesk operating as a capability secure, point-and-click, distributed file manager. In this example, CapDesk has one window open on a Windows computer, and another window open on a Linux computer. The user can copy/paste files and folders back and forth between the file systems, and directly run capability confined applications (such as CapEdit) on files on any part of a file system to which CapDesk has been granted a capability.

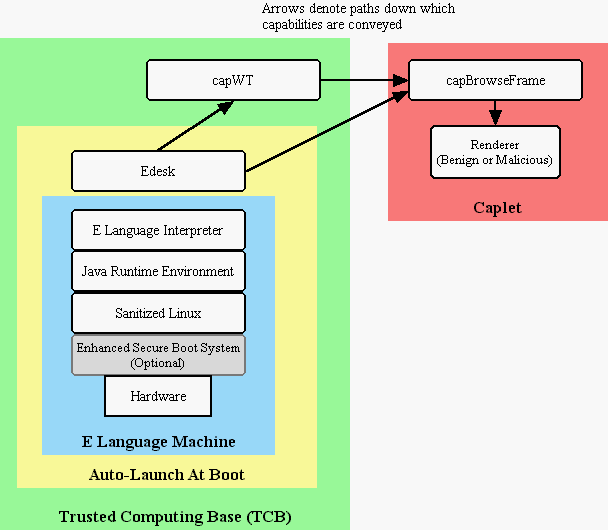

Building the E Language Machine

With CapDesk expanded to be able to launch capability confined applications, it became possible to build an E Language Machine (ELM). The ELM is the world's first general-purpose point-and-click computing platform that is capability-secure and invulnerable to traditional computer viruses and trojan horses. It is built by running CapDesk on top of a Linux core. CapDesk effectively seals off the underlying layers of software from the applications that are running: only capability confined applications can be launched from CapDesk, so only capability confined applications can run. Those underlying layers (Linux, Java, and the E language), together with CapDesk, comprise the Trusted Computing Base (TCB), which has full authority over the system.

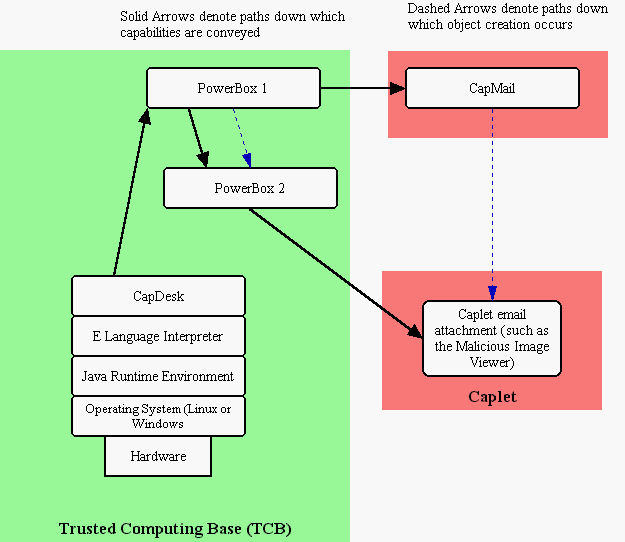

Architecture of the E Language Machine. A Linux kernel launches a Java virtual machine, which launches an E Language Interpreter, which launches a CapDesk. CapDesk seals off the underlying non-capability elements of the Trusted Computing Base from capability-confined applications launched from CapDesk. In this sample scenario, a hypothetical capability-confined mail tool is running, and the user has double-clicked on an email attachment. The attachment is run in its own unique trust/authority realm, making it unable to engage in the usual practices of computer viruses, i.e., reading the mail address file and connecting to the Internet to send copies of itself to the user's friends.

Building the DarpaBrowser

A web browser needs surprisingly few authorities considering the amount of value it supplies to its users. A simple browser needs little more than the authority to talk HTTP protocol. Since the DarpaBrowser was itself designed as a capability confined application, this meant that the browser never had very much authority available for the renderer to steal if the renderer somehow managed to totally subvert the browser. As later observed by the security review team in their report,

Withholding capabilities from the CapBrowser is doing it a favor: reducing the powers of the CapBrowser means that the CapBrowser cannot accidentally pass on those powers to the renderer, even if there are bugs in the implementation of the CapBrowser.

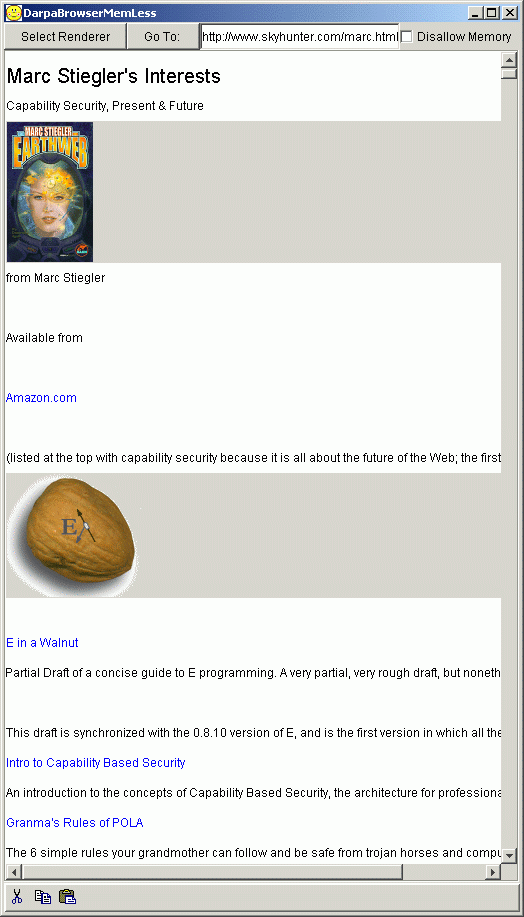

One of the security goals was to prevent the renderer from displaying any page other than the current page specified by the browser. While capability confinement was trivially able to prevent the renderer from going out and getting URLs of its own choice, there was one avenue of page acquisition that required slightly more sophistication to turn off: if the renderer were allowed to have a memory, it could show information from a previously-displayed page instead of the new one. Therefore, the renderer had to be made "memoryless".

With capabilities as embodied in E, one straightforward way of achieving memorylessness is to throw the renderer away and create a new one each time the user moves to a new page. This was the strategy used in the DarpaBrowser.
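A rough sketch of this strategy, in Python rather than E, is shown below. The `Renderer` and `Browser` classes are hypothetical stand-ins, and the `allow_memory` flag is an assumption modeled on the "Allow Memory" toggle mentioned later in this report. The sketch only shows instance replacement; it does not capture the confinement that keeps a fresh renderer from reaching shared state by other routes.

```python
class Renderer:
    """Stand-in renderer; a malicious one might try to remember earlier pages."""

    def __init__(self):
        self.seen = []  # state that a renderer could accumulate across pages

    def render(self, html):
        self.seen.append(html)
        return f"rendered {len(html)} chars; pages seen by this instance: {len(self.seen)}"


class Browser:
    def __init__(self, renderer_factory, allow_memory=False):
        self._factory = renderer_factory
        self._allow_memory = allow_memory
        self._renderer = renderer_factory()

    def show(self, html):
        if not self._allow_memory:
            # Throw the old renderer away so nothing carries over between pages.
            self._renderer = self._factory()
        return self._renderer.render(html)


memoryless = Browser(Renderer)
memoryless.show("<p>one</p>")
memoryless_second = memoryless.show("<p>two</p>")

remembering = Browser(Renderer, allow_memory=True)
remembering.show("<p>one</p>")
remembering_second = remembering.show("<p>two</p>")
```

With memory disallowed, every renderer instance has seen exactly one page when it renders; with memory allowed, the same instance accumulates history.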

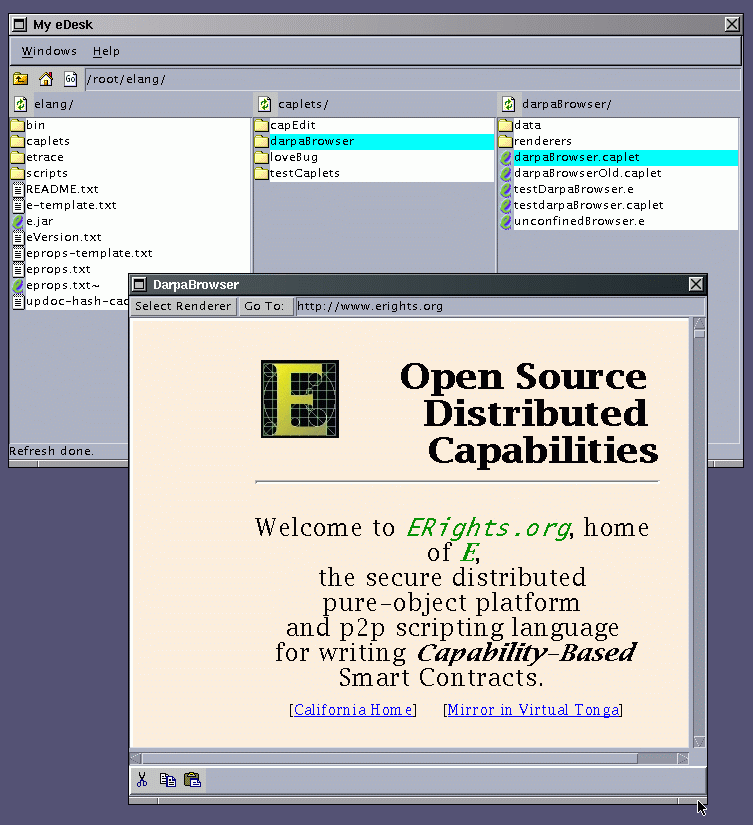

An ELM with CapDesk and DarpaBrowser. The DarpaBrowser in this image is running the Benign Renderer, based on the JEditorPane widget of the Java Swing Library; this widget directly renders HTML.

Building the Renderers

According to the proposal originally presented for the DarpaBrowser, only two renderers would be built: a benign renderer, and a malicious renderer. As the project proceeded, it became clear that these were inadequate to test all the principles we wished to assess. A "text renderer" was built in time for the experiment. This renderer simply presents the text of the page on the screen, creating a "source view". It was able to present any HTML document, no matter how badly the HTML had been written by the page author.

In the aftermath of the review, an additional renderer was built, the CapTreeMemless renderer, as described later.

Taming Swing/AWT

A key part of the effort of preparing for the experiment was taming the Java API, in particular, taming the AWT and Swing user interface toolkits. The act of taming involves applying a thin surface to a non-capability API that drives interactions into a capability-disciplined model. The Java API is not designed for capability security, yet contains an enormous amount of valuable functionality that cannot be easily rewritten from scratch. It turned out that the taming approach was in general adequate to make this API usable. Frequently, taming involves nothing more than suppressing "convenience" methods, i.e., methods that convey authority that programmers already have. Let us give a simple and a complex example:

As a simple example, given any Swing user interface widget, one can recursively invoke "getParent" on the widget and its ancestors until a handle on the entire window frame is acquired. A malicious renderer could defeat, in a half dozen lines of code, the explicit goal of ensuring that the URL field and the page being displayed were in sync. Therefore the getParent method on the Component class must be suppressed to follow capability discipline.
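The attack and the taming step can be sketched in a few lines. The sketch below is in Python with hypothetical classes (`Widget`, `TamedWidget`) that merely mimic the shape of the Swing situation; it is not the taming layer built for E, and Python would still let a determined caller reach the wrapped widget by introspection.

```python
class Widget:
    """Untamed widget: any holder can climb to the top-level frame."""

    def __init__(self, name, parent=None):
        self.name = name
        self._parent = parent
        self.text = ""

    def get_parent(self):
        return self._parent

    def set_text(self, text):
        self.text = text


def climb_to_root(widget):
    """The attack described above: follow get_parent until it runs out."""
    while widget.get_parent() is not None:
        widget = widget.get_parent()
    return widget


class TamedWidget:
    """Thin facade exposing only methods that convey no extra authority."""

    __slots__ = ("_set_text",)

    def __init__(self, widget):
        # Keep only the one operation we intend to expose.
        self._set_text = widget.set_text

    def set_text(self, text):
        self._set_text(text)


frame = Widget("frame")
page_panel = Widget("page_panel", parent=frame)

reached = climb_to_root(page_panel)   # untamed: the renderer reaches the frame
tamed = TamedWidget(page_panel)
tamed.set_text("hello")               # tamed: drawing still works
has_get_parent = hasattr(tamed, "get_parent")
```

The tamed facade still lets the renderer do its job (set text on its own panel), but the method that would have led upward to the frame is simply absent.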

As a complex example, the most ridiculous anti-capability subsystem we encountered in Swing was the Keymap architecture for JTextComponents. All JTextComponents in a Java virtual machine share a single global root Keymap. Programmers can create their own local keymaps and add them as descendants of the global root Keymap, creating a tree of keymaps; the children receive and process keystrokes first, and can discard a keystroke before it reaches their parents. The part of this that is exquisitely awful is that it is possible to edit the global root keymap. Malicious software can trivially vandalize keystroke interpretation for the entire system. Even more maliciously, objects can eavesdrop on every keystroke, including every password and every confidential sentence that is typed by the user. This is not merely a violation of security confinement. This is a violation of the simplest precepts of object-oriented modularity. Not only is it trivially easy for malicious code to attack the system, it is trivially easy for the conscientious programmer to destroy the system by accident. Indeed, the way the DarpaBrowser team first identified this particular security vulnerability in Swing (long before initiating the actual taming process) was by accidentally destroying the keymap for the eBrowser software development environment.

To solve this problem, the methods that allowed the root keymap to be accessed had to be suppressed, and since child keymaps could not be integrated into the system without designating the parent, new constructors had to be created for keymaps that would, behind-the-scenes, attach the keymap to the root keymap if no other parent were specified. While the actual amount of code needed to tame this subsystem was small, more effort was needed to design the taming mechanism than would have been required by the JavaSoft Swing developers to create a minimally sensible object-oriented design in the first place.

Fortunately, the bulk of Swing is well-designed from an object-oriented perspective, which is what made it possible for the taming strategy to work well. Had the keymap subsystem not been an aberration, rewriting the user interface toolkit from lower-level primitives would have been more cost-effective despite the enormous costs such an undertaking would have entailed.

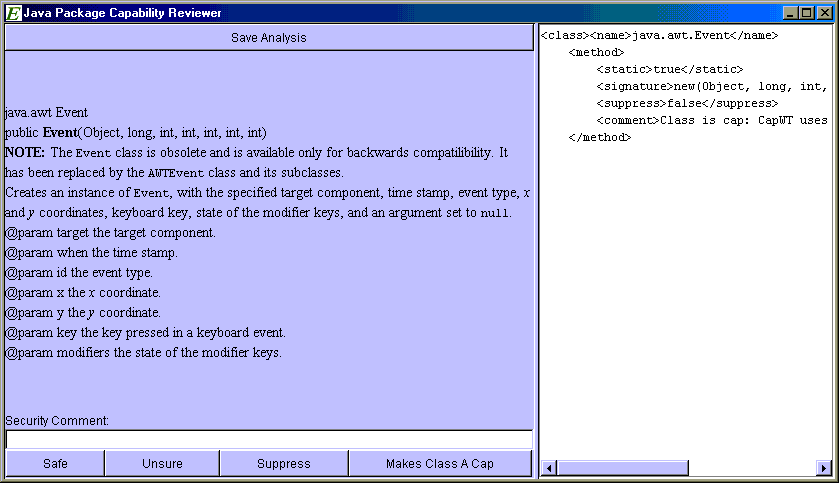

The AWT/Swing API is an enormous bundle of classes and methods. A substantial portion of the entire research effort went into this taming effort, including writing two versions of the CapAnalyzer (see picture) tool to support the human tamer in his efforts. The approach taken in this first attempt at taming was to be conservative, i.e., to shut off everything that might have a security risk associated with it, and enable only things that were well understood.

Version 1 of the E Capability Analyzer. The human analyst is walked through all the classes in a Java package, given the Javadoc from the API for each class, and allowed to individually suppress methods and to mark the class "safe" (i.e., it confers no authority) or "unsafe" (i.e., it must be explicitly granted to a confined module by another module that has the authority).
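The decisions the analyst records can be pictured as a small table of per-class markings. The sketch below is a guess at a convenient data structure, written in Python; the names `ClassTaming` and `may_call` are hypothetical, the safe/unsafe marking shown for `Component` is illustrative only, and none of this reflects CapAnalyzer's actual file format. It does reflect the conservative policy described above: anything not yet reviewed is shut off.

```python
from dataclasses import dataclass, field


@dataclass
class ClassTaming:
    name: str
    safe: bool                                    # True: the class confers no authority
    suppressed: set = field(default_factory=set)  # methods hidden from confined code

    def allows(self, method):
        return method not in self.suppressed


def may_call(taming_table, class_name, method):
    """Conservative lookup: unreviewed classes and suppressed methods are denied."""
    entry = taming_table.get(class_name)
    return entry is not None and entry.allows(method)


# Illustrative entry only; getParent is the method discussed earlier in this section.
taming = {
    "Component": ClassTaming("Component", safe=False, suppressed={"getParent"}),
}

parent_allowed = may_call(taming, "Component", "getParent")
visible_allowed = may_call(taming, "Component", "setVisible")
unreviewed_allowed = may_call(taming, "UnreviewedClass", "anything")
```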

Limitations On the Approach

Before plunging into the experiment and its results, we should carefully note the limitations on the approach taken in the DarpaBrowser effort.

Goal Limitations: As explicitly noted in the original proposal, Denial of Service attacks were out of scope, as were information leaks. The goal was to prevent the renderer from gaining authorities, such as the power to reset the clock or delete files. While as a practical matter the most dangerous kinds of information leaks were also prevented by capability confinement, some types of leaks, such as covert channels, were not even challenged, much less prevented. For more detail on the goals, see the Project Plan (Appendix 1).

ELM Limitations: The ELM is the world's first capability secure computing platform with a point-and-click user interface. It is a remarkable by-product of building the DarpaBrowser. We would be remiss not to note, however, that it has significant disadvantages compared to a true capability-secure operating system. Notably, the TCB is extremely large, and a TCB of this size is at greater risk of harboring security vulnerabilities. Furthermore, the architectural complexity of this TCB probably makes it too ungainly ever to pass a full security audit. Despite these limitations, however, the ELM still represents a substantial leap forward in the integration of security, flexibility, and usability.

Benign Renderer Limitations: During the last days leading up to the experiment, it became clear that the benign renderer in particular was a weak experimental platform. This renderer was built, as specified in the proposal, using the Swing HTML widget. This gave us a professional-looking rendering at extremely little cost. One disappointment was that this HTML widget was extremely fickle about the HTML it would accept; as a consequence, very few pages on the Web will actually render through it.

However, for our experimental purposes, this was not the major failing. More serious was that this widget used authority conveyed to it as a part of the Trusted Computing Base for much of its interaction with web pages. Consequently, the benign renderer exercised almost none of the authority confinement elements of the Browser: being a TCB HTML widget, it would just go out to the Web and get its own images based on the textual string of the URL, for example, without having to negotiate with the browser for actual authority. This was the reason we built the text renderer, as a proof that "real" rendering could be done without using TCB powers.

The Experiment

A snapshot was taken of the E/CapDesk system to use as a baseline for the security review. This baseline version of the system can be found at http://www.erights.org/download/0-8-12/index.html

The reviewers were given documentation on the system, goals, and infrastructure well in advance of the actual review, so that they could arrive with a reasonable familiarity and start fast.

For five consecutive days, the review team and the development team ate, slept, and drank DarpaBrowser security. On the first day the overall architecture was reviewed, and the schedule was made out for all the successive days of the review. The schedule ensured that no pieces of the system with significant security sensitivities were excluded. Actual selection of what to review, how to review it, and how long to take reviewing it was strictly driven by the reviewers; the development team assisted in every way possible, but they were only there to assist. Everyone took notes, and those notes were merged on the final day.

After the week of in-depth scrutiny, the review team wrote the security report that can be found in Appendix 2.

Results

The written security review can be read in Appendix 2. Anyone interested in the details of the results is encouraged to read the full report. To be extremely brief, the results were in line with our expectations: for the security goals specified in the project plan (which included goals from the original DARPA solicitation and our original proposal), we did find a number of security vulnerabilities (twenty-one in total) in our first implementation of the DarpaBrowser. Most of these vulnerabilities were simple programming errors that were easy to correct. Two of them have proven to be too hard, too unimportant, or too irrelevant to the future development path of the E platform to fix within the limits of this contract; these two are described in detail in the Post-Experiment Developments section below. However, not even the two unfixed vulnerabilities expose flaws in the fundamental capability security architecture. All can be straightforwardly repaired once the capability paradigm is embraced.

The crucial outcome of the experiment was of course the lessons learned, which are detailed in the next section. But for completeness' sake, we mention here an issue found in the memoryless version of the DarpaBrowser. By toggling the "Allow Memory" box on and off while browsing the Web, it becomes clear that the browser suffers a significant performance penalty when the renderer is made memoryless by creating a new renderer instance each time a new page is loaded. The performance analysis tools available with E at this time are too crude for us to state a conclusive explanation for this. We hypothesize that the problem lies in the way Java Swing discards and replaces subpanels. Regardless of whether the problem lies in Swing or in the current implementation of E, however, there is no reason that this should be an expensive operation, i.e., there is nothing about the capability paradigm that imposes a significant performance penalty for discarding objects, either within or across a trust boundary. We therefore do not say anything more about this discovery in this report, though it is another reason why we are eager to move from Swing to SWT, as described later.

Lessons Learned

General Truths

Acquisition of Dangerous/Inappropriate Authority Can Be Prevented by Capability Based Security. All the vulnerabilities found in the experiment can be easily remedied within the capability architecture. Indeed, it can be argued that these vulnerabilities can only be remedied in the capability architecture, as suggested by the second general truth:

Leaving the capability paradigm invites grave security risks. One of the two significant sources of vulnerabilities in the DarpaBrowser itself was the code for analyzing HTML. HTML is a simple text format that embodies implicit authority demands (for more detail, see the section below about HTML and the Confused Deputy problem). As a consequence, to deal with HTML--or to deal with any of the many other non-capability protocols now in use on the Web--you must depart from the capability paradigm long enough to process the protocol into a capability form. Through the DarpaBrowser investigation, we have learned just how important it is to enforce the following rules:

- When you must leave the capability paradigm, get back as quickly (with as few lines of code) as possible.
- Use the most rigorous techniques available for managing non-capability representations of authority.
- Model the other forms of authority as capabilities whenever possible.

In the DarpaBrowser, the strings embedded in the HTML that represent URLs were initially identified by using string matching. As discussed at length in the security review, this proved far too vulnerable. In the end an HTML parser was substituted for the string matcher, essentially eliminating this issue.

Taming a large API takes substantial resources. The amount of time and effort required to tame Java AWT/Swing was significantly underestimated. Even taking the conservative approach described earlier (to shut off parts of the API that were not well understood), the speed with which taming was performed was too great, introducing vulnerabilities. The security review team concluded that taming was the most significant overall risk to the E capability implementation strategy, and we concur. A whole new tool should be written to support taming, and considerably greater resources must be committed to complete the taming process in such a fashion that the security community can feel confident in the result. A draft specification for the taming support tool can be found in the Appendix.

Capability confinement can significantly improve the cost/benefit ratio of security reviews. By following the flow of authority down capability references, even without any tool except the human eye, one can quickly identify large sections of code that cannot possibly have dangerous authority and do not need security review. The ability to cut off the review at the point where capabilities ceased flowing appeared repeatedly in the course of the experiment as a substantial time savings. This strength of capabilities was highlighted by the use of the powerbox pattern discussed below, though this was far from the only place in which the technique played a powerful role.

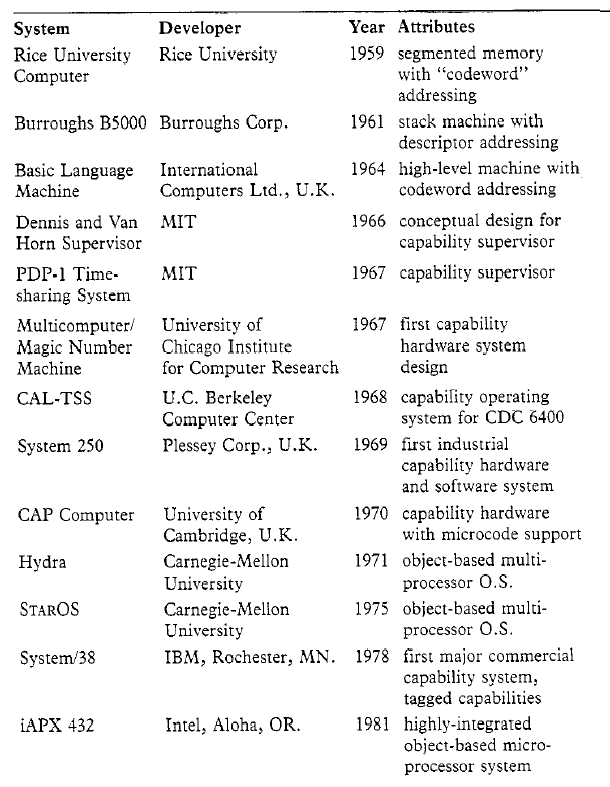

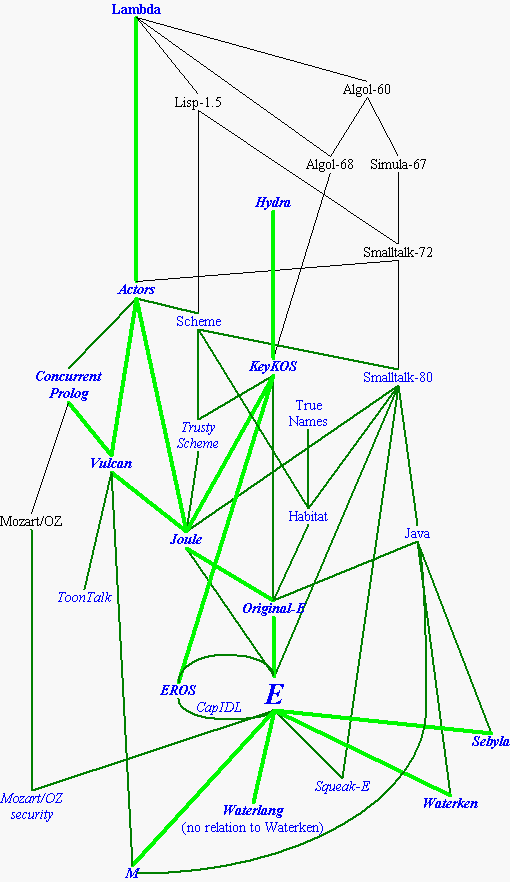

Significant opportunities for research in capability-based security patterns still exist. Capability based security has been known to the computer security field for decades as chronicled in [Levy84]; an update of Levy’s chronology of capability milestones can be found in the Appendices. However, a relatively small percentage of the resources spent in computer security have been invested in the capability paradigm. As a consequence, within the capability field lie rich veins of security innovations still waiting to be mined.

Capability-Based Secure Programming is, with a few key exceptions, little different from Object-Oriented Programming. As suggested by the JTextComponent Keymap example described in the Taming section earlier, capability secure designs have a great deal in common with clean, modular object-oriented designs. Often a clean modular design is all one needs to secure a subsystem; the same minimization of object reference that reduces risk of accident and simplifies maintenance also implements much of POLA. Ironically, one of the differences between objects and capabilities is that, for capabilities, one must be more rigorous about applying object-oriented modularity: while the non-security-aware programmer can trade off modularity against other goals (even if the other goals are bad, as exemplified by the keymap), the capability-secure programmer needs to enforce modularity discipline pervasively. This has a number of specific consequences, described in detail in the Specific Insights section below. With those exceptions, however, the object oriented programmer will find little difference between object-oriented programming and capability-based programming when using a capability-based language such as E.

Within a capability-confined realm, even horrifically poor, security-oblivious programming can do little or no harm. This is one of the lessons of the confinement of the malicious renderer. Even if every bit of the architecture and implementation of the malicious renderer abandoned both capability and object-oriented design, little harm could come of it. The worst it could do is render the HTML poorly, i.e., it could be broken. It could not, however, harm the DarpaBrowser or the underlying system. The designer of the interface from the browser to the renderer needs to have skill as a capability-oriented programmer, but programmers without any special training can write the bulk of the code in a typical (secure) system.

Specific Insights

The Powerbox Pattern is a significant new invention. The powerbox mediates authority grants for the confined module on behalf of the powerbox owner. If the module requests an authority with which the module has been endowed at creation, the authority is simply granted. If the module needs a new authority during operations, the powerbox negotiates with its owner for such authority. If the owner decides to change authorities during operations (either grants or revocations), the powerbox fulfills these changes.

The Powerbox security pattern proved to be a powerful ingredient in leveraging the security review resources for maximum productivity. It effectively collects the security issues at the boundary between a pair of trust realms into a small body of code. Consequently, the bulk of the code inside a single trust realm does not have to be reviewed for security issues. The vast majority of the CapDesk code went un-reviewed, yet we have reasonable confidence that no vulnerabilities were missed because of this decision.

This pattern was invented in the course of the DarpaBrowser research. We first developed the pattern for the CapDesk Powerbox, from which the pattern gets its name. The CapDesk Powerbox is the software module that mediates the granting of authorities to a capability confined application from the user. This pattern was reused (though incorrectly, as discussed in the General Truths earlier) at the interface between the DarpaBrowser and the renderer (embodied as the renderPGranter component). By following the now-well-understood pattern henceforth, future developers will be able to build more secure systems at less cost and with greater reliability. The Powerbox pattern is elaborated in the Appendix.
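The three behaviors described above (grant from the initial endowment, negotiate with the owner for new authority, and honor later revocations) can be illustrated with a minimal Python sketch. This is not the CapDesk implementation: the names `Powerbox`, `ask_owner`, and `_revocable` are assumptions made for the example, authorities are modeled as plain callables, and Python itself does not enforce the encapsulation that E provides.

```python
def _revocable(authority):
    """Wrap a callable authority so that it can be switched off later."""
    cell = [authority]

    def call(*args, **kwargs):
        if cell[0] is None:
            raise PermissionError("authority revoked")
        return cell[0](*args, **kwargs)

    def revoke():
        cell[0] = None

    return call, revoke


class Powerbox:
    """Mediates authority grants to a confined module on behalf of an owner."""

    def __init__(self, endowment, ask_owner):
        # endowment: name -> callable authority granted at creation.
        # ask_owner: callable(name) returning an authority, or None to decline.
        self._ask_owner = ask_owner
        self._grants = {}  # name -> (wrapped authority, revoker)
        for name, authority in endowment.items():
            self.grant(name, authority)

    # Owner-facing operations.
    def grant(self, name, authority):
        self.revoke(name)  # replace any earlier grant of the same name
        self._grants[name] = _revocable(authority)

    def revoke(self, name):
        entry = self._grants.pop(name, None)
        if entry is not None:
            entry[1]()  # disable the wrapper the module already holds

    # Module-facing operation.
    def request(self, name):
        if name not in self._grants:
            authority = self._ask_owner(name)  # negotiate with the owner
            if authority is None:
                raise PermissionError(f"owner declined {name!r}")
            self.grant(name, authority)
        return self._grants[name][0]
```

In this sketch the confined module would be handed only the `request` operation, while the owner keeps `grant` and `revoke`. All security-relevant decisions sit in this one small class, which is the property that made the pattern useful for focusing the review.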

HTML embodies the classic Confused Deputy security dilemma. HTML uses text to designate a page to be accessed without actually conveying the authority to access that page. Both the HREF attribute and the IMG tag are examples of places where the HTML text assumes the browser will use its own authority to fulfill the intent, not of the browser owner, but of the HTML author. This is the classic characterization of the Confused Deputy problem, which occurs when designation is separated from authority [Hardy88]. As a simple example, suppose a page from outside your firewall specifies a URL that is interpreted, inside your firewall, as connoting a particular page available inside the firewall. The page author beyond the wall almost certainly does not have authority to reach this page, but the browser does. In this circumstance, the HTML from that outside page, possibly written by an adversary and in collaboration with the malicious renderer, can use the browser's authority on its own behalf. This did not, as it turned out, violate any of the security goals of the project, but it is a disappointment.

Encountering the Confused Deputy problem was the proximate cause for Norm Hardy, the founder of modern capability thinking, to turn to capabilities in the first place. There is no solution except to totally embrace capabilities, by ubiquitously using capabilities not only in the browser, but in the HTML language itself.

It is possible to build a capability-oriented protocol derived from HTML that has many of the desirable properties of HTML, but which enables proper security enforcement. However, such a protocol would not be backwards-compatible with HTML (though note that one can layer a capability protocol on top of HTML [Close99]). Since this project explicitly called for working with HTML as it exists now, this line of research stopped when the problem had been identified.
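The separation of designation from authority can be made concrete with a small Python sketch. The classes `Page` and `Browser`, the function `follow_link`, and the example URLs are hypothetical and exist only for illustration; they are not part of the DarpaBrowser.

```python
class Page:
    """A resource; holding a reference to it is the authority to read it."""

    def __init__(self, text):
        self._text = text

    def fetch(self):
        return self._text


class Browser:
    """A deputy that holds authority to every page it can reach."""

    def __init__(self, reachable):
        self._reachable = reachable  # URL string -> Page

    def follow_url(self, url):
        # Confused deputy: the HTML author supplies only a designation (a string),
        # and the browser supplies its own authority to satisfy the request.
        return self._reachable[url].fetch()


def follow_link(page_capability):
    # Capability style: the designator is the authority, so an author can
    # only name pages the author already holds a reference to.
    return page_capability.fetch()
```

With `follow_url`, an outside author who merely knows the string `"http://intranet.example/payroll"` can cause the browser to fetch the internal page. With `follow_link`, the same author would first need to have been given the `Page` reference.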

Event-loop models of concurrency have a synergistic relationship with capabilities for ensuring security. One of the more common causes of vulnerability is the Time Of Check To Time Of Use (TOCTOU) hole. In a TOCTOU vulnerability, a value is checked to confirm that it is valid, and then before it is used the malicious client changes it. TOCTOU vulnerabilities are exquisitely difficult to detect and fix in systems that use threads as part of their concurrency model, since the value change can happen in between the execution of individual lines of code. The E promise-based architecture, which puts a programmer-friendly face on event loops, guarantees that this path to sneaking in a change cannot occur. All the actions in a single event execute as an atomic operation. This greatly simplifies TOCTOU analysis, detection, and correction.
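A toy Python event loop illustrates why a check and a use inside one event cannot be separated. It is only a sketch of the run-to-completion idea, not E's promise architecture; `EventLoop`, `demo`, and the file paths are assumptions made for the example.

```python
from collections import deque


class EventLoop:
    """Single-threaded loop: each queued call runs to completion as one turn."""

    def __init__(self):
        self._queue = deque()

    def send(self, fn, *args):
        # The call is only queued; it cannot run inside the current turn.
        self._queue.append((fn, args))

    def run(self):
        while self._queue:
            fn, args = self._queue.popleft()
            fn(*args)


def demo():
    loop = EventLoop()
    state = {"path": "/tmp/report.txt"}
    used = []

    def attacker_swap():
        state["path"] = "/etc/passwd"

    def check_then_use():
        if state["path"].startswith("/tmp/"):  # time of check
            loop.send(attacker_swap)           # attacker reacts, but is only queued
            used.append(state["path"])         # time of use, same turn

    loop.send(check_then_use)
    loop.run()
    return used, state["path"]
```

Calling `demo()` returns `(["/tmp/report.txt"], "/etc/passwd")`: the swap does happen, but only in a later turn, after the checked value has already been used.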

Drag/drop authority must be explicitly granted. Authority for drag/drop must be explicitly conferred as a launch-time grant, not as a safe non-authority conveying operation. At first glance, it would appear that being a drag source or a drop target is not authority conveying: after all, the user, in performing a drag and a drop, is engaging in the kind of explicit action that can be used in the capability paradigm to identify and convey appropriate authority. However, there is a subtle problem with automatically granting the authority to be a drag source or a drop target to all comers. If components from different trust realms are granted authority inside a single window frame (as in the DarpaBrowser), the differently trusted component can engage in spoofing: it can trick the user into believing it is a part of the main application, not a separate application that must be treated differently. For example, in the DarpaBrowser, if the renderer could designate itself as a drop target, a user drop of a file on the renderer's panel would enable the renderer to present the data in that file without informing the browser. At that point the browser's URL field would be out of sync with the page being displayed. This would explicitly breach the security goals of the project. It is fortunate for the overall E effort that the DarpaBrowser exercise was the context for making these first authority-distinguishing decisions, otherwise the E platform might well have had to make an upwards-compatibility break to close this vulnerability after it had been more extensively deployed.

Explicit Differences between Capability-Oriented Programming and Object-Oriented Programming include:

No static (global) public mutable state is allowed. The E programming language enforces this, since there are no static public mutables. This has a minor but real impact on system design: an object such as the java.lang.System.out object cannot be created.

Object instantiation sometimes requires more steps. In a capability system enforcing POLA, there tend to be more levels of instantiation for an object: the work normally done by a single powerful constructor will, as a part of POLA, often be broken into a series of partial constructors as the final user of the constructed object gets just enough power to perform local customizations of the object. An explicit recognition of this multiple-level instantiation is the Author pattern followed by many emakers (E library packages). An E constructor will often run in the scope of an Authorizer that is first created and granted the needed authorities; then the constructor itself can be handed to less-trusted objects without having to give the less-trusted object the authorities needed to make the constructor. The most complex current example of this pattern is the FrameMakerMakerAuthor in the CapDesk Powerbox. An individual Caplet is granted an individual frameMaker for making windows. To create the frameMaker, there is first an authorization step in which authority to create JFrames (the underlying Swing windows) is granted. Then an intermediate Maker step customizes the frameMaker with an unalterable caplet pet icon and pet name so that the caplet cannot use its power to make frames to spoof the user.

The Authorization step, and other intermediate levels of instantiation, can be disconcerting for the first-time capability programmer with an object-oriented background. It is, however, a simple extension of standard object-oriented practice. Indeed, between the start of this project and its completion we observed that the use of inner classes in Java has become increasingly ubiquitous in the example code from major vendors such as Sun and IBM. For the Java programmer who has become comfortable with these nested classes, the leap to multiple levels of instantiation is not even a speed bump, but more a pebble on the road. Meanwhile, the benefits of multi-layer POLA-oriented instantiation make it possible to be extremely confident, during debugging, that the majority of the library packages in a system could not have caused a surprising authority-requiring problem: one can see at a glance that the package did not have the authority, and move on to the next candidate for inspection.
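The multiple levels of instantiation can be sketched with nested closures in Python. This is a loose analogue of the frameMaker example, not the CapDesk code: the function names, the `make_raw_frame` parameter, and the dictionary standing in for a JFrame are all assumptions made for illustration.

```python
def frame_maker_maker_author(make_raw_frame):
    """Authorization step: capture the authority to create raw windows."""

    def frame_maker_maker(pet_name):
        """Maker step: fix the caplet's pet name once, out of the caplet's reach."""

        def frame_maker(title):
            """What the caplet receives: it chooses a title, never the prefix."""
            return make_raw_frame(f"{pet_name}: {title}")

        return frame_maker

    return frame_maker_maker


def make_raw_frame(full_title):
    # Stand-in for creating a real window; returns a simple record instead.
    return {"title": full_title}
```

A trusted party would call `frame_maker_maker_author(make_raw_frame)("Editor")` and hand only the resulting `frame_maker` to the caplet. The caplet can then create frames, but each one carries the fixed prefix, and the caplet never holds `make_raw_frame` itself.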

Facets and Forwarders are common patterns and must be easily supported. In capability programming, on the boundaries between trust realms, facets and revokable transparent forwarders are often used to grant limited access to powerful objects. The frameMaker above is an example: it is a facet on the Swing JFrame that does not allow the icon or the title prefix to be changed. Fortunately, the E programming language makes the construction of facets and forwarders painless. This implements the following user interface rule that is older than programming, and indeed, older than the printed word: "If you want someone to do something the right way, make the right way the easy way." Forwarders and facets have been made very easy. In E, the code for a general-purpose constructor for revokable forwarders can be written in as little as six lines of code:

    def makeRevokableForwarderPair(obj) :any {
        var innerObj := obj
        def forwarder {match [verb, args] {E call(innerObj, verb, args)}}
        def revoker {to revoke() {innerObj := null}}
        [revoker, forwarder]
    }
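For readers unfamiliar with E, roughly the same idea can be expressed in Python. This is an analogue rather than a translation, and the name `make_revocable_forwarder_pair` is chosen here only to mirror the E code; unlike E, Python does not prevent a determined caller from inspecting closures, so the sketch shows the shape of the pattern rather than a secure implementation.

```python
def make_revocable_forwarder_pair(obj):
    """Return (revoke, forwarder); the forwarder relays calls until revoked."""
    inner = obj

    class Forwarder:
        def __getattr__(self, verb):
            # Mirrors E's `match [verb, args]`: any method name is relayed.
            def call(*args, **kwargs):
                if inner is None:  # checked at call time, so revocation is immediate
                    raise PermissionError("forwarder has been revoked")
                return getattr(inner, verb)(*args, **kwargs)

            return call

    def revoke():
        nonlocal inner
        inner = None

    return revoke, Forwarder()
```

The check happens inside `call` rather than when the attribute is looked up, so a method obtained before revocation stops working once `revoke()` has been called.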

Encapsulation must be strictly enforced. As noted earlier, modularity discipline must be followed pervasively. It cannot be broken for convenience.

In capability style, there can be no unchecked preconditions on the client in a lesser trust realm. If a client does not fulfill the preconditions in a contract with an interface, and if the implementation of the interface does not check and detect this failure, the results are unpredictable. Such unpredictability is the enemy of security, and cannot be tolerated. In the context of E, a large part of this principle can be implemented through the following E-specific rule:

Rigorous guards should be imposed on arguments received across a trust boundary. Due to the nature of E semantics, a malicious component can send an object across the trust boundary which changes its nature as it is used, essentially spoofing the recipient. E has the most flexible and powerful dynamic type checking system yet devised for a programming language (using guards). However, to support rapid prototyping, these guards are optional in E. Therefore a best practice for E objects on the trust boundary is to impose the most restrictive guards possible on every argument received. Because of the DarpaBrowser experience, an experimental feature has been implemented for E that would, on a module-by-module basis, allow the developer to require guards on all variables in the module. A powerbox module, for example, should probably operate with this extra requirement imposed upon it.
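The danger of an argument that "changes its nature as it is used" can be shown with a Python analogue. E guards are a language feature with no direct Python equivalent, so the sketch below uses a hand-written check; `guard_str`, `ShiftyUrl`, and `open_page` are hypothetical names introduced for the example.

```python
def guard_str(value):
    """Accept only an exact built-in str; subclasses may override behavior."""
    if type(value) is not str:
        raise TypeError(f"expected str, got {type(value).__name__}")
    return value


class ShiftyUrl(str):
    """A hostile argument: passes a prefix check once, then fails it."""

    def __init__(self, value):
        self._calls = 0

    def startswith(self, prefix, *rest):
        self._calls += 1
        return self._calls == 1


def open_page(url):
    # Guard the argument at the trust boundary before any other use.
    url = guard_str(url)
    if not url.startswith("http://"):
        raise ValueError("unsupported scheme")
    return url
```

Without the guard, a `ShiftyUrl` would pass the first `startswith` check and then answer differently on any later check. With the guard, it is rejected before the receiving code relies on it.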

Post-Experiment Developments

Closing Vulnerabilities

The major effort after the security review was to clean up as many of the security vulnerabilities identified in the review as possible for the final deliverable. The single most time consuming part of that effort was to alter the interface between the DarpaBrowser and its renderer to use a parse tree with embedded capabilities for describing the page to the renderer, rather than using text strings and raw URLs.

The DarpaBrowserMemless was the result of that effort. It uses parse trees rather than string matching, and embeds the correct capabilities in the parse tree handed to the renderer rather than playing a "guessing game" by trying to validate the url strings sent back to it from the renderer.
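The idea of handing the renderer capabilities instead of raw URL strings can be sketched in Python with the standard `html.parser` module. This is a much simplified illustration, not the DarpaBrowserMemless code: `LinkCap`, `CapTreeBuilder`, and the `navigate` callback are assumed names, and the "tree" is flattened to a list to keep the example short.

```python
from html.parser import HTMLParser


class LinkCap:
    """Opaque capability to visit one URL that the browser has already chosen."""

    def __init__(self, navigate, url):
        self._follow = lambda: navigate(url)

    def follow(self):
        return self._follow()


class CapTreeBuilder(HTMLParser):
    """Browser-side parser: turns href strings into capabilities."""

    def __init__(self, navigate):
        super().__init__()
        self._navigate = navigate
        self.nodes = []  # ("text", str) or ("link", LinkCap), in document order

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self.nodes.append(("link", LinkCap(self._navigate, href)))

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.nodes.append(("text", text))
```

The renderer would receive only `nodes`. It can invoke `follow()` on a link it was given, but it never sends a URL string back to the browser, so the browser has nothing to validate or guess at.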

In addition to upgrading the benign and evil renderers to work with the new browser, a new renderer was built, the "capTreeMemless" renderer. This renderer addressed a desire expressed by the security review team: that at least one renderer without special TCB authority be created to demonstrate that authority conveyance for items other than links, in particular images, operates correctly inside the capability paradigm.

CapTreeMemLess renderer at work. While not visually attractive, it does demonstrate the use of an HTML parse tree with embedded capabilities for rendering images and enabling links.

The status of all the vulnerabilities identified in the security review can be tracked through links embedded in the HTML version of the review found at http://www.combex.com/papers/darpa-review/index.html. These links tie directly into the bug tracking system for the E platform. As can be seen there, nineteen of the twenty-one vulnerabilities have been closed. The two vulnerabilities left unaddressed, and the reasons for leaving them unrepaired, are:

125505 Suppress show while allowing frame display: An application with minimal window-creation authority can, by creating a window, steal the focus from the currently active application, and possibly receive sensitive data being typed by the user before the user realizes that he is no longer working with the intended window. This vulnerability does not impact the DarpaBrowser's ability to confine the renderer (the renderer does not have window creation authority), but it is a true vulnerability that should be repaired. However, due to the way in which Swing bundles the window opening and window activation operations, it is not trivial to fix: the JFrame uses a single operation, show(), to open the window, bring it to the front, and steal the focus. Simply suppressing the show() method is not one of the choices, since it is a required operation. We would still proceed to fix this problem (by building an experimental subclass of JFrame and re-implementing as much as it takes to unbundle the opening of the window from the stealing of the focus) if it were not for an additional development that occurred late in the course of the project: IBM brought out an alternative windowing kit, SWT, that can replace Swing and appears to be superior in many ways to Swing. SWT is described later in this report. Since we now tentatively plan to replace Swing with SWT for E programming, a major undertaking to build a better JFrame would be a waste of effort.

125503 Prevent backtrace revealing private data: A thrown exception could in principle carry sensitive information across a trust boundary. Once again, this does not affect the DarpaBrowser and its goals: the renderer is never in a position to receive sensitive data that it does not have more straightforward access to anyway (i.e., the browser may be used to read a sensitive page, in which case the renderer gets it directly anyway, for rendering). Furthermore, the description of this vulnerability as written in the security review has been found to be erroneous: the bug is both much less dangerous (it cannot leak authority) and much more difficult to fix than had been understood at the time of the review. As a consequence, we have allocated resources to more urgent requirements at this time.

Development of Granma's Rules of POLA

As a consequence of the demonstrability of the CapDesk/DarpaBrowser system, we have been able to present capability security principles to large audiences of people who previously would have found the topic too academic to appreciate. As people grasped that security really was possible, a few retreated into complaints that, except in very simple examples (like the current CapDesk/DarpaBrowser example), the management of the security features of the system would be too complicated for "normal" people to use. Fortunately, developing the CapDesk system gave us sufficient insight into the "normal security needs" for the "normal user", that we were able to develop a simple set of guidelines with the following features:

- Can be quickly taught to people of only modest computer skill,
- Allows people to get their work done easily and without barriers,
- Nevertheless guarantees that effective security (for "normal" needs) is maintained.

Having been given a serious review by the E language community in the E language discussion group, these guidelines are now known as Granma's Rules of POLA. Their description can be found in the Appendix. Much research remains to be done in the area of making capability security user friendly, but this document points in a promising direction.

Introduction of SWT

In the closing months of this contract, a new technology came to our attention. IBM has released an alternative to JavaSoft's AWT/Swing user interface toolkit, the Standard Widget Toolkit (SWT). This toolkit has already been used for a very sophisticated user-interface application, the Eclipse software development environment. SWT has the following advantages over AWT/Swing:

- SWT is much smaller than AWT/Swing. This has tremendous ramifications for the taming process, which was highlighted in the security review as the single greatest risk in the E platform. Reducing the size of the toolkit has a more-than-linear and critical impact on both feasibility and risk.

- SWT engages the native widget kit at a higher level of abstraction than does Swing. As a consequence, applications written in Java/SWT really do look and feel exactly like any other application written specifically for the platform, since SWT usually uses the native widgets. The difference in attractiveness and comfort for the user is, all by itself, a compelling reason to switch. Java with SWT is an enormous step forward in the land of "Write once, run anywhere".

- SWT uses a true open source license, unlike the Swing SCSL license. This has two important advantages. First, if it turns out during taming that simple taming is complicated and problem-laden (as with the JFrame grab-focus-on-opening behavior in Swing, described earlier), we have the option of simply modifying the problematic class rather than attempting a poor taming effort. Second, the license allows us to compile SWT into native and dot-net forms, allowing us to use a single standard toolkit across all three of the E implementations requested by the E user base: Java, native binary, and dot-net. This too would be, all by itself, a compelling reason to switch.

- SWT uses a very different garbage collection strategy than Swing. We uncovered a significant garbage collection hole in Java for Windows and Linux (Java on the Mac seems fine) during the course of this effort. While everything worked well enough for the prototyping work done in this project, for a production environment this memory leak would be unacceptable. We believe the memory leak lies in Swing and its interface to the native graphics system. The SWT garbage collection strategy, while more primitive, is also more likely to work correctly. Once again, this all by itself would be a compelling reason to switch.

Our exploration of SWT has not yet progressed to the point where we are certain that it meets our needs for functionality and capability-compatible modularity, but it looks very promising. The Eclipse development environment is de facto evidence that the toolkit has extensive functionality, so it is unlikely that a problem will be identified in this realm. The capability-compatibility remains the greatest risk, though preliminary investigation has not identified any fatal problems (though we have identified one place, in the drag and drop formats supported, where more work will be required than is required in Swing).

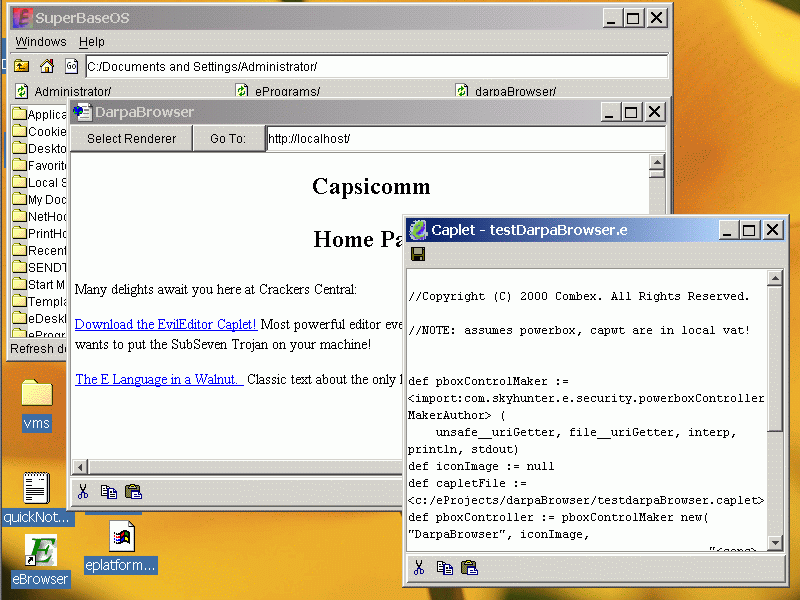

Assessment of Capabilities for Intelligent Agents and User Interface Agents

One of the enhancements made to the DarpaBrowser that went above and beyond the minimum requirements of the contract was the embedding of a caplet launching framework inside the browser itself. If the DarpaBrowser encounters a page whose URL ends with “.caplet”, the browser not only renders the text from that page, but also launches a capability confined application from it. This application lives in a separate trust realm from the browser itself; it is guaranteed that the new caplet cannot use the browser’s authorities, and it is also guaranteed that the browser cannot use any authorities later granted to the new caplet.

The use of the DarpaBrowser to launch caplets from across the web in safety is a simple demonstration of the potential of capability security for enabling the development of a new generation of harmless yet powerful mobile software agents. Cooperating members of a community could grant sensible authorities (such as, the authority to read a document folder) to mobile agents confidently and painlessly. These software agents could delegate their authority to more evolved agents without either user intervention or user concern. There is no security or usability reason such agents could not evolve to the point where they, not the human beings, were doing the most work on the Web, informing us about results when they reached a threshold of value relevant to us.

Let us look at a simple example, the SETI screensaver project, in a capability context. The SETI screensaver is effectively an agent seeking computing resources. The authorities it needs are reasonably simple:

- Authority to communicate with a single Web site (the SETI central coordination site)
- Authority to write to the screen until a keystroke or mouse click occurs (i.e., an authority on a revokable forwarder to the display, with the forwarder automatically revoking itself on KeyPress or MouseDown)

These authorities run little risk of compromising the computer. Indeed, these authorities fall easily inside Granma’s Rules of POLA: running the SETI screensaver requires no breaking of the basic security principles. Yet the current SETI software, because it must run with standard Windows ambient authority, is as dangerous as the possibly-malicious renderer in the DarpaBrowser. Large numbers of people who would otherwise run SETI will not do so because of the security implications. And the caution about allowing software agents to run on the individual home computer is necessarily multiplied by orders of magnitude if the desire is to run agents on large databases. As a consequence, we believe that capability security is not merely a good idea for software agents. We believe capability security is a requirement if this kind of computing is ever to achieve its destiny.

To support software agents properly, the capability management system embodied in the CapDesk Powerbox must be fleshed out to support all the different kinds of authority grants that make sense. A particular area where some research is required is in the general-purpose designation of authority to speak to other objects, as distinct from the authority to access system resources (the authority to read a directory is access to a system resource; the authority to talk to a third party spell checker requires a general-purpose object-to-object granting framework). But the principles have already been demonstrated. The road to a flexible capability framework is generally smooth, with only a few twists and turns remaining before software agents can be properly and fully supported.

Conclusions

Capability based security enables software developers to achieve computer security goals that cannot be reached with conventional security systems. The capability paradigm also enables more cost-effective security reviews that can provide better confidence that these security goals have been achieved. Furthermore, early indications are that these security goals can be achieved with neither undue hindrance of the user, nor with noticeable constriction of the functionality of the computing platform. The user-friendly power of capability security is demonstrated in the picture below.

In this picture four different trust realms interact flexibly, securely, and in a user-friendly fashion. The CapDesk in the background is running with TCB authority; the DarpaBrowser is in a confined trust realm with only an HTTP protocol authority; the renderer inside the browser is running in a trust realm with only access to a single window panel, and a single URL at any given time as specified by the browser; the text-editing Caplet is running in a trust realm with read/write authority to a single file. The applications all look and feel like ordinary unconfined applications; no passwords are needed, no certificates need to be studied for validity; interaction between the trust realms proceeds smoothly and intuitively, but only at the behest of the user, never under the control of the less-trusted applications.

However, even with the power of capability security, truly securing our computers from cyberattack is hard work. In particular, the core infrastructure--components such as CapDesk, the Powerbox and the E Language itself--must be developed by seasoned capability security professionals, and must be reviewed by peers of equal skill. We suggest that, for this reason among others, such infrastructure needs to be built under public scrutiny, using open source licenses, and that professional security reviews are still a crucial part of the process of building secure systems.

Nonetheless, one of the truly remarkable powers capability security gives us is the ability to turn the bulk of the work in building secure systems over to people who have no security expertise whatsoever. Indeed, untrusted developers (even professional crackers!) can build large parts of the most sensitive computing systems. How can this be? It can be because any module that does not receive powerful authorities can be written by anyone in the world with no security consequences. This is explicitly demonstrated by the Malicious Renderer's inability to achieve authority-stealing security penetrations.

Capability patterns of software modularization as simple as the basic E module mechanism (which grants no authority whatsoever upon importation) and as sophisticated as the Powerbox developed to confine the DarpaBrowser can isolate untrusted subsystems, be they modules written by subcontractors of the British government or agents of the Chinese intelligence services. While we do not expect to see our military depending on the Chinese government for sensitive software development any time soon, this scenario demonstrates the power of the capability paradigm, and the brightness of the future in which capabilities become ubiquitous.

References

[Bishop79] Matt Bishop, "The Transfer of Information and Authority in a Protection System", in Proceedings of the 7th ACM Symposium on Operating Systems Principles, published as Operating System Review, vol. 13, #4, 1979, pp 45-54.

[Boebert84] W. E. Boebert, "On the Inability of an Unmodified Capability System to Enforce the *-Property", in Proceedings of the 7th DoD/NBS Computer Security Conference, 1984.

[Chander01] Ajay Chander, Drew Dean, John Mitchell, "A State Transition Model of Trust Management and Access Control", 14th IEEE Computer Security Foundations Workshop, Online at citeseer.

[Close99] Tyler Close, "Announcing Droplets", 1999. email archived at http://www.eros-os.org/pipermail/e-lang/1999-September/002771.html.

[Dennis66] Jack Dennis, E. C. van Horn, "Programming Semantics for Multiprogrammed Computations", in Communications of the ACM, vol. 9, pp. 143-154, 1966.

[Donnelley81] Jed E. Donnelley, "Managing Domains in a Network Operating System" (1981) Proceedings of the Conference on Local Networks and Distributed Office Systems, pp. 345-361. Online at http://www.nersc.gov/~jed/papers/Managing-Domains/.

[Ellison99] Carl Ellison, Bill Frantz, Butler Lampson, Ron Rivest, B. Thomas, and T. Ylonen, "SPKI Certificate Theory" IETF RFC 2693. Online at http://www.ietf.org/rfc/rfc2693.txt.

[Gong89] Li Gong, "A Secure Identity-Based Capability System", IEEE Symposium on Security and Privacy, 1989. Online at citeseer.

[Granovetter73] Mark Granovetter, "The Strength of Weak Ties", in American Journal of Sociology (1973) Vol. 78, pp. 1360-1380.

[Hardy85] Norm Hardy, "The KeyKOS Architecture", Operating Systems Review, September 1985, pp. 8-25. Updated at http://www.cis.upenn.edu/~KeyKOS/OSRpaper.html.

[Hardy86] Norm Hardy, "U.S. Patent 4,584,639: Computer Security System", Key Logic, 1986 (The "Factory" patent), Online at http://www.cap-lore.com/CapTheory/KK/Patent.html.

[Hardy88] Norm Hardy, "The Confused Deputy, or why capabilities might have been invented", Operating Systems Review, pp. 36-38, Oct. 1988. Online at http://cap-lore.com/CapTheory/ConfusedDeputy.html.

[Harrison76] Michael Harrison, Walter Ruzzo, Jeffrey Ullman, "Protection in Operating Systems", Comm. of ACM, Vol. 19, n 8, August 1976, pp. 461-471. Online at http://www.cs.fiu.edu/~nemo/cot6930/hru.pdf.

[Hewitt73] Carl Hewitt, Peter Bishop, Richard Stieger, "A Universal Modular Actor Formalism for Artificial Intelligence", Proceedings of the 1973 International Joint Conference on Artificial Intelligence, pp. 235-246.

[Jones76] A. K. Jones, R.J. Lipton, Larry Snyder, "A Linear Time Algorithm for Deciding Security", in Proceedings of the 17th Symposium on Foundations of Computer Science, Houston, TX, 1976, pp 33-41.

[Kahn87] Kenneth M. Kahn, Eric Dean Tribble, Mark S. Miller, Daniel G. Bobrow: "Vulcan: Logical Concurrent Objects", in Research Directions in Object-Oriented Programming, MIT Press, 1987: 75-112. Reprinted in Concurrent Prolog: Collected Papers, MIT Press, 1988.

[Kahn96] Kenneth M. Kahn, "ToonTalk - An Animated Programming Environment for Children", Journal of Visual Languages and Computing in June 1996. Online at ftp://ftp-csli.stanford.edu/pub/Preprints/tt_jvlc.ps.gz. An earlier version of this paper appeared in the Proceedings of the National Educational Computing Conference (NECC'95).

[Kain87] Richard Y. Kain, Carl Landwehr, "On Access Checking in Capability-Based Systems", in IEEE Transactions on Software Engineering SE-13, 2 (Feb. 1987), 202-207. Reprinted from the Proceedings of the 1986 IEEE Symposium on Security and Privacy, April, 1986, Oakland, CA; Online at http://chacs.nrl.navy.mil/publications/CHACS/Before1990/1987landwehr-tse.pdf.

[Karp01] Alan Karp, Rajiv Gupta, Guillermo Rozas, Arindam Banerji, "Split Capabilities for Access Control", HP Labs Technical Report HPL-2001-164, Online at http://www.hpl.hp.com/techreports/2001/HPL-2001-164.html.

[Lampson71] Butler Lampson, "Protection", in Proceedings of the Fifth Annual Princeton Conference on Information Sciences and Systems, pages 437-443, Princeton University, 1971. Reprinted in Operating Systems Review, 8(1), January 1974. Online at citeseer.

[Levy84] Henry Levy, "Capability-Based Computer Systems", Digital Press, 1984. Online at http://www.cs.washington.edu/homes/levy/capabook/.

[Miller00] Mark S. Miller, Chip Morningstar, Bill Frantz, "Capability-based Financial Instruments", in Proceedings of Financial Cryptography 2000, Springer Verlag, 2000. Online at http://www.erights.org/elib/capability/ode/index.html.

[Morningstar96] Chip Morningstar, "The E Programmer's Manual", Online at http://www.erights.org/history/original-e/programmers/index.html. (Note: The "E" in the title and in this paper refers to the language now called "Original-E".)

[Raymond99] Eric Raymond, "The Cathedral and the Bazaar", O'Reilly, 1999, Online at http://www.tuxedo.org/~esr/writings/cathedral-bazaar/.

[Rees96] Jonathan Rees, "A Security Kernel Based on the Lambda-Calculus", (MIT, Cambridge, MA, 1996) MIT AI Memo No. 1564. Online at http://mumble.net/jar/pubs/secureos/.

[Saltzer75] Jerome H. Saltzer, Michael D. Schroeder, "The Protection of Information in Computer Systems", Proceedings of the IEEE, Vol. 63, No. 9 (September 1975), pp. 1278-1308. Online at http://cap-lore.com/CapTheory/ProtInf/.

[Sansom86] Robert D. Sansom, D. P. Julian, Richard Rashid, "Extending a Capability Based System Into a Network Environment" (1986) Research sponsored by DOD, pp. 265-274.

[Shapiro83] Ehud Y. Shapiro, "A Subset of Concurrent Prolog and its Interpreter". Technical Report TR-003, Institute for New Generation Computer Technology, Tokyo, 1983.

[Shapiro99] Jonathan S. Shapiro, "EROS: A Capability System", Ph.D. thesis, University of Pennsylvania, 1999. Online at http://www.cis.upenn.edu/~shap/EROS/thesis.ps.

[Shapiro00] Jonathan Shapiro, "Comparing ACLs and Capabilities", 2000, Online at http://www.eros-os.com/essays/ACLSvCaps.html.

[Shapiro01] Jonathan Shapiro, "Re: Old Security Myths Continue to Mislead", email archived at http://www.eros-os.org/pipermail/e-lang/2001-August/005532.html.

[Sitaker00] Kragen Sitaker, "thoughts on capability security on the Web", email archived at http://lists.canonical.org/pipermail/kragen-tol/2000-August/000619.html.

[Snyder77] Larry Snyder, "On the Synthesis and Analysis of Protection Systems", in Proceedings of the 6th ACM Symposium on Operating System Principles, published as Operating Systems Review vol 11, #5, 1977, pp 141-150.

[Stiegler00] Marc Stiegler, "E in a Walnut", Online at http://www.skyhunter.com/marcs/ewalnut.html.

[Tanenbaum86] Andrew S. Tanenbaum, Sape J. Mullender, Robbert van Renesse, "Using Sparse Capabilities in a Distributed Operating System" (1986) Proc. Sixth Int'l Conf. On Distributed Computing Systems, IEEE, pp. 558-563. Online at ftp://ftp.cs.vu.nl/pub/papers/amoeba/dcs86.ps.Z.

[Tribble95] Eric Dean Tribble, Mark S. Miller, Norm Hardy, Dave Krieger, "Joule: Distributed Application Foundations", Online at http://www.agorics.com/joule.html, 1995.

[Wagner02] David Wagner & Dean Tribble, "A Security Analysis of the Combex DarpaBrowser Architecture", Online at http://www.combex.com/papers/darpa-review/.

[Wallach97] Dan Wallach, Dirk Balfanz, Drew Dean, Edward Felten, "Extensible Security Architectures for Java", in Proceedings of the 16th Symposium on Operating Systems Principles (Saint-Malo, France), October 1997. Online at http://www.cs.princeton.edu/sip/pub/sosp97.html.

[Yee02a] Ka-Ping Yee, "User Interaction Design for Secure Systems", Berkeley University Tech Report CSD-02-1184, 2002. Online at http://www.sims.berkeley.edu/~ping/sid/uidss-may-28.pdf.

[Yee02b] Ka-Ping Yee, Mark Miller, "Auditors: An Extensible, Dynamic Code Verification Mechanism", Online at http://www.sims.berkeley.edu/~ping/auditors/auditors.pdf.

Appendices

Table of contents

- Table of Contents i

Executive Summary 1

DarpaBrowser Capability Security Experiment 4

Appendix 1: Project Plan 36

- Introduction and Overview 36

- Hypotheses 36

- Experimental Setup 37

- Proof of Hypotheses 38

- Demonstrations 39

Appendix 2: DarpaBrowser Security Review 40

- Introduction 40

- 1. DarpaBrowser Project 40

- 1.1 General goals 40

- 2. Review Process 43

- 3. Their Approach 44

- 3.1 Capability model 44

- 3.2 Security Boundaries 46

- 3.3 Non-security Elements that Simplified Review 47

- 4. Achieving Capability Discipline 48

- 4.1 UniversalScope 49

- 4.2 Taming the Java Interface 50

- 4.3 Security holes found in the Java taming policy 52

- 4.4 Risks in the process for making taming decisions 56

- 4.5 E 57

- 4.6 Summary review of Model implementation 59

- 5. DarpaBrowser Implementation 60

- 5.1 Architecture 60

- 5.2 What are all the abilities the renderer has in its bag. 61

- 5.3 Does this architecture achieve giving the renderer only the intended power? 62

- 5.4 CapDesk 62

- 5.5 PowerboxController 62

- 5.6 Powerbox 62

- 5.7 CapBrowser 63

- 5.8 RenderPGranter 63

- 5.9 JEditorPane (HTMLWidget) 66

- 5.10 Installer 66

- 6. Risks 66

- 6.1 Taming 66

- 6.2 Renderer fools you 67

- 6.3 HTML 69

- 6.4 HTML Widget complexity 69

- 7. Conclusions 70

Appendix 3: Draft Requirements For Next Generation Taming Tool (CapAnalyzer) 72

Appendix 4: Installation Procedure for building an E Language Machine 74

- Introduction 74

- Installation Overview 74

- Step One: Install Linux 75

- Step Two: Install WindowMaker, Java, and E 76

- Step Three: Secure Machine Against Network Services 78

- Step Four: Configure WindowMaker/CapDesk Startup 78

- Step Five: Confined Application Installation and Normal Operations 79

- Step Six: Maintenance 82

- In Case Of Extreme Difficulty 82

Appendix 5: Powerbox Pattern 83

Appendix 6: Granma's Rules of POLA 88

- Granma's Rules 89

- Excerpt from Original Email, Granma's Rules Of POLA 89

Appendix 7: History of Capabilities Since Levy 92

Appendix 1: Project Plan

Project Plan

Capability Based Client

Combex Inc.

BAA-00-06-SNK; Focused Research Topic 5

- Technical Point of Contact:

- Marc Stiegler

- marcs-at-skyhunter.com

Introduction and Overview

Combex will develop a capability-secure Web browser that confines its rendering engine so that, even if the rendering engine is malicious, the harm that the renderer can do is severely limited.

Hypotheses

We hypothesize that capability secure technology can simply and elegantly implement security regimes, based on the Principle of Least Authority, that cannot be achieved with orthodox security architectures including firewalls and Unix Access Control Lists. Specifically, we hypothesize that capability security can confine the authority granted to an individual module of a Web Browser, the rendering engine, such that the rendering engine has no authority over any component of the computer upon which it resides except for:

authority over a single window panel inside the web browser, where it has full authority to draw as it sees fit

authority to request URLs from the web browser in a manner such that the web browser can confirm the validity of the request

authority to consume compute cycles for its processing operations.
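The first two authorities listed above can be pictured as the only object references handed to the renderer. The following is a minimal Python sketch, not the DarpaBrowser's E code. All names (`Panel`, `UrlRequester`, `render_page`, `fake_fetch`) are hypothetical, the validity rule (only links on the current page are accepted) is an assumption made for illustration, and Python does not enforce confinement as E does.

```python
class Panel:
    """Hypothetical stand-in for the single window panel the renderer may draw on."""

    def __init__(self):
        self.drawn = []

    def draw(self, text):
        self.drawn.append(text)


class UrlRequester:
    """Hypothetical facet through which the renderer asks the browser for a URL.

    The browser, not the renderer, decides whether a request is valid.
    Assumed rule for this sketch: only links on the current page are valid.
    """

    def __init__(self, allowed_links, fetch):
        self._allowed = set(allowed_links)
        self._fetch = fetch  # browser-held fetch function, not given to the renderer

    def request(self, url):
        if url not in self._allowed:
            raise PermissionError("browser rejected URL request: " + url)
        return self._fetch(url)


def render_page(html, panel, requester):
    """A renderer written by an untrusted party: it works only with its arguments."""
    panel.draw(html.upper())  # it may draw whatever it likes on its own panel
    try:
        # A malicious attempt to reach a URL the browser never offered.
        requester.request("http://attacker.example/steal")
    except PermissionError:
        panel.draw("[request refused]")


def fake_fetch(url):
    # Stand-in for the browser's HTTP authority so that the sketch runs offline.
    return "<p>content of " + url + "</p>"


panel = Panel()
requester = UrlRequester(["http://example.org/next"], fake_fetch)
render_page("<p>hello</p>", panel, requester)
```

In this sketch the renderer can scribble freely on its panel, but every URL request passes through a check owned by the browser, which mirrors the second authority in the list.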

We cannot directly test and prove that every theoretically possible authority beyond these three is absent. For experimental purposes, we therefore invert the approach and show that several authorities that are traditionally easy to obtain are unavailable. We hypothesize that the rendering engine will not have any of the following specific authorities:

No authority to read or write a file on the computer's disk drives

No authority to alter the field in the web browser that designates the URL most recently retrieved